Facebook is ramping up both its human response efforts for suicide threats or high-risk postings, and its use of artificial intelligence to identify such risks, the company wrote in a new blog post yesterday.

"Over the last month, we’ve worked with first responders on over 100 wellness checks based on reports we received via our proactive detection efforts," VP of Product Management Guy Rosen wrote in a blog post. "This is in addition to reports we received from people in the Facebook community. We also use pattern recognition to help accelerate the most concerning reports. We’ve found these accelerated reports— that we have signaled require immediate attention —are escalated to local authorities twice as quickly as other reports. We are committed to continuing to invest in pattern recognition technology to better serve our community."

The social network has been working on the issue for more than 10 years, but started employing artificial intelligence in March. Facebook uses pattern recognition to find posts where either the content or the comments match a pattern for suicide risk (for instance, a high volume of comments like “Are you ok?” and “Can I help?”). It also reviews the comments and likes on Facebook Live posts to flag a particular part of the video for human review.

As well as highlighting high-risk posts, the AI prioritizes them so that the Community Relations team (thousands of people around the world, among them dedicated self-harm specialists) addresses the most immediate danger first. The AI also helps the team quickly find contact information for local first responders when that is warranted. Rosen shared a video in which local police officers described how a call from Facebook likely saved a young girl's life.

The company is now rolling these features out beyond the United States, with the goal of deploying them worldwide except in the EU.

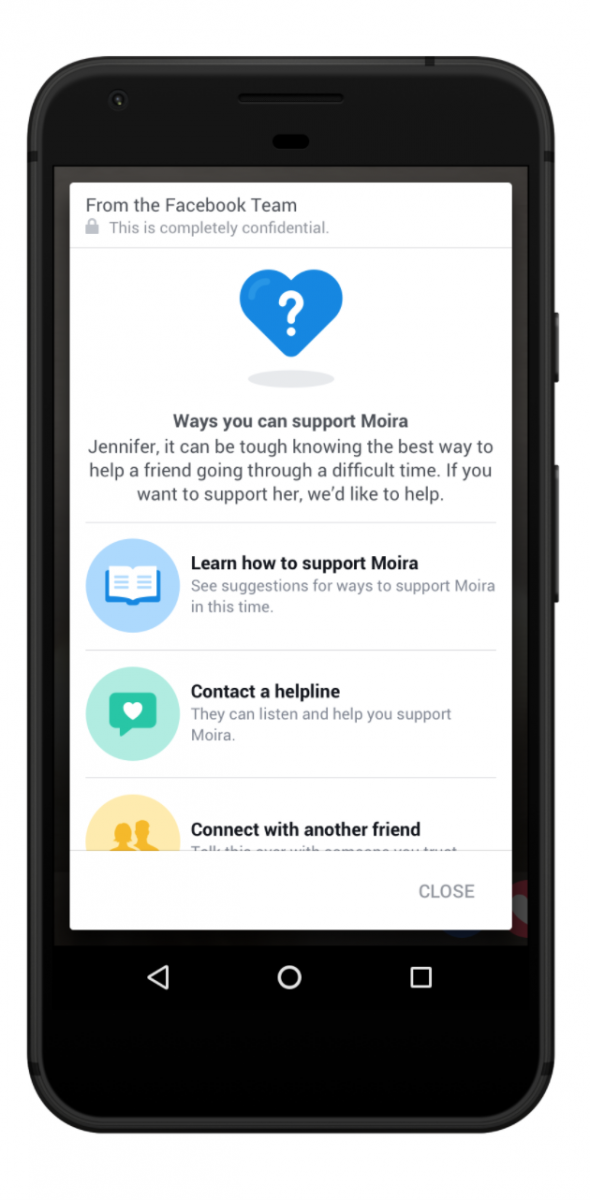

In addition to contacting first responders when the situation warrants it, many of Facebook's suicide prevention features have focused on making resources available to the poster and encouraging friends who report a post to reach out.

"We provide people with a number of support options, such as the option to reach out to a friend and even offer suggested text templates," Rosen wrote. "We also suggest contacting a help line and offer other tips and resources for people to help themselves in that moment."