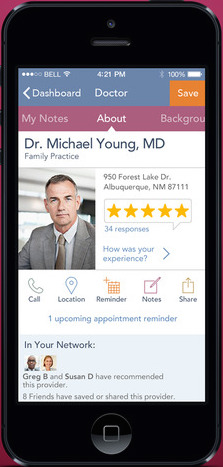

Healthgrades was one of the rating systems examined in the study.

As payers increasingly consider giving patients price transparency tools to compare hospitals, a number of groups are also offering tools to compare hospital quality. But a new study in Health Affairs shows that these comparison tools, even those from seemingly reputable sources, are wildly inconsistent.

"To better understand differences in hospital ratings, we compared four national rating systems," study authors wrote in the abstract. "We designated 'high' and 'low' performers for each rating system and examined the overlap among rating systems and how hospital characteristics corresponded with performance on each. No hospital was rated as a high performer by all four national rating systems. Only 10 percent of the 844 hospitals rated as a high performer by one rating system were rated as a high performer by any of the other rating systems."

The study looked at U.S. News & World Report's Best Hospitals; Healthgrades' America's 100 Best Hospitals; Leapfrog's Hospital Safety Score; and Consumer Reports' Health Safety Score. Modern Healthcare also reports that in 27 cases, a hospital ranked near the top of one list and near the bottom of another.

The problem, the researchers concluded, is that we don't have a consistent and reliable definition of quality, and different rating systems focus on different metrics.

"The lack of agreement among the national hospital rating systems is likely explained by the fact that each system uses its own rating methods, has a different focus to its ratings, and stresses different measures of performance," they wrote.

In interviews with Modern Healthcare, representatives of the rating organizations suggested that this may not be a bad thing, as long as each one is transparent about its ratings.

“If each hospital is good and bad at different things, then each patient will want to use the ratings that most directly address his or her individual needs,” Ben Harder, managing editor and director of healthcare analysis for U.S. News & World Report, told Modern Healthcare. “If just one rating system existed, they would have less information to use in choosing a provider.”

Of course, the inconsistency between lists has an upside for hospitals: no one has to end up at the bottom, at least not on every list.

At an Economist healthcare forum last fall, a panel on price transparency identified one of the biggest obstacles: doctors who are afraid of landing at the bottom of a ranked list.

After all, medical boards and licensing laws should, in theory, mean that there are no bad doctors, or at least none so bad that they cannot be trusted with patients’ lives. Doctors worry about what landing at the bottom of a quality report could mean for their practice and reputation, whether that report is based on subjective patient reviews, aggregated patient outcomes, or error rates.

Dave Mason, senior vice president at RelayHealth, said that this fear is one of the roadblocks to establishing uniform quality comparison measures.

“You can bring payers and providers together, but we want to make it so the information is standard and people can make meaningful decisions. Take quality, make it uniform, and publish it,” he said. “We’ll work through it, but the problem is no one wants to be on the bottom of the list. So we have to finally find a way to get enough momentum and push it over, because after we do that we’ll then raise the bar.”