After building up the human component of its network of suicide prevention organizations, Facebook is now bringing in the machines: the social media company announced it has updated its suicide prevention tools with artificial intelligence to improve identification of people at risk, improve the reporting process and speed up response time.

In a company blog post, Vanessa Callison-Burch, Jennifer Guadagno and Antigone Davis (Facebook's Product Manager, Researcher, and Head of Global Safety, respectively) described key features of the updates. These include integrating the prevention tools with Facebook Live and testing AI-powered pattern recognition to identify posts likely to include thoughts of suicide.

“Based on feedback from experts, we are testing a streamlined reporting process using pattern recognition in posts previously reported for suicide,” the company stated. “This artificial intelligence approach will make the option to report a post about ‘suicide or self injury’ more prominent for potentially concerning posts like these.”

As Facebook tests that tool, employees will review posts flagged by the software and provide resources if the situation calls for it, even if no one has reported the post yet.

“We are starting this limited test in the US and will continue working closely with suicide prevention experts to understand other ways we can use technology to help provide support,” the company wrote.

The company is also testing a feature that lets people connect with Facebook's crisis support partners (the National Suicide Prevention Lifeline, Crisis Text Line and the National Eating Disorders Association) via Messenger. Facebook plans to scale the service as these organizations adapt to changes in communication volume.

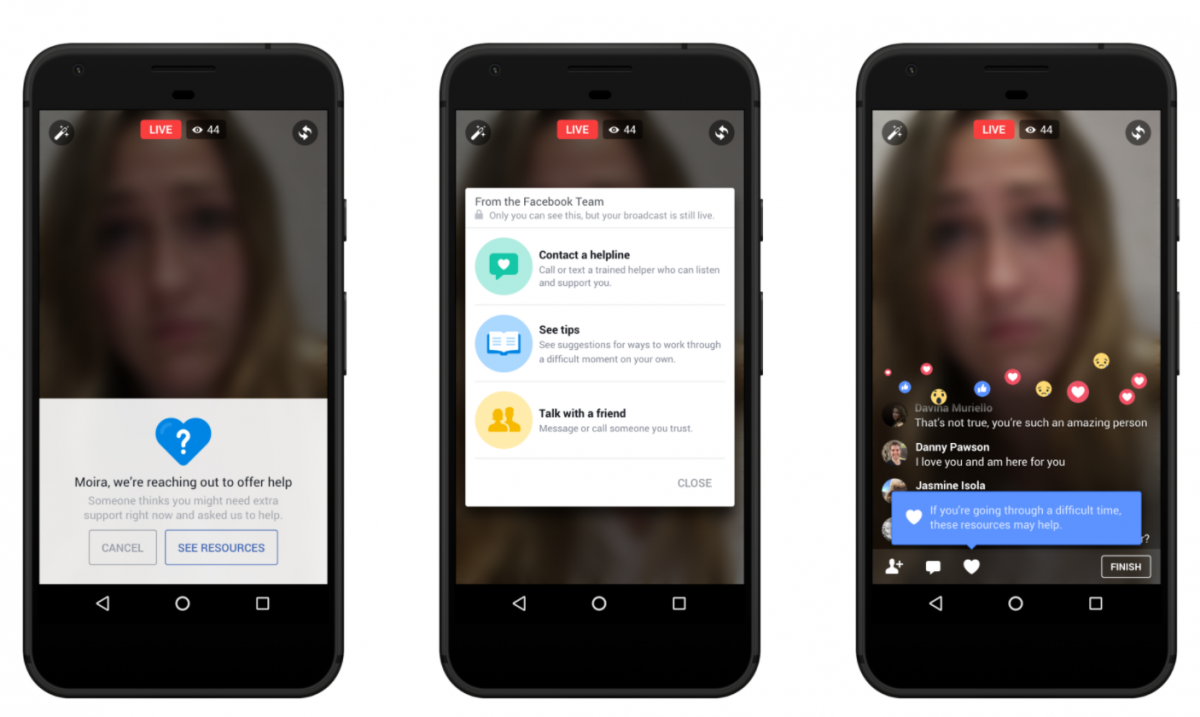

The Facebook Live integration is available now. People watching a friend's live video that concerns them can reach out to that friend directly and report the video to Facebook, which will then guide them in helping their friend. People who share a concerning live video will also see a set of resources on their screen.

Facebook has had suicide prevention resources in place for more than 10 years, developed through partnerships with organizations including the National Suicide Prevention Lifeline, the Substance Abuse and Mental Health Services Administration and Save.org. Initially, these tools offered a way for people to report a friend's post if they believed it expressed suicidal intentions. In 2015, Facebook added resources for the person who posted the concerning content, giving them the option to reach out to a friend along with encouragement to connect with a mental health expert at the Suicide Prevention Lifeline. Last year, Facebook expanded those options globally by making it possible for people reporting a post to reach out to that friend directly.

“There is one death by suicide in the world every 40 seconds, and suicide is the second leading cause of death for 15-29 year olds. Experts say that one of the best ways to prevent suicide is for those in distress to hear from people who care about them,” the company wrote. “Facebook is in a unique position — through friendships on the site — to help connect a person in distress with people who can support them. It’s part of our ongoing effort to help build a safe community on and off Facebook.”