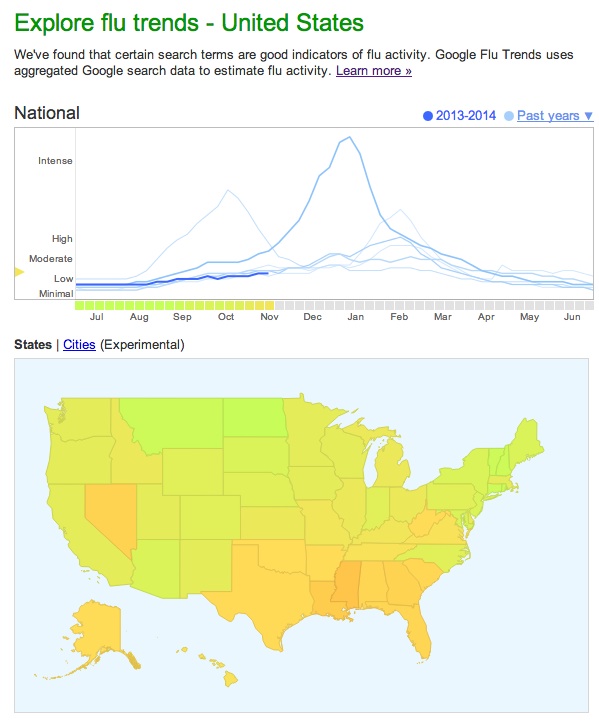

Many people know that Google Flu Trends was wildly inaccurate in predicting the number of flu cases at the end of 2012, as first reported by Nature's Declan Butler. A group of Northeastern University professors just published a pair of papers, however, that suggest the problems with Flu Trends are much more wide-reaching and systemic than a single glitch -- and they believe there's a lesson here for future big data endeavors.

"There was a news article which noted that Google Flu Trends had missed last season by quite a lot, over 100 percent," David Lazer, Professor in Political Science and Computer and Information Science at Northeastern University, told MobiHealthNews. "Given the initial version was purporting to be within a few points of the CDC data ahead of time, going from two or three percentage points to 103 percentage points off was rather stunning."

Lazer and co-researcher Ryan Kennedy, a visiting professor at Northeastern, started looking more closely at recent Google Flu Trends data and discovered that the inaccuracy Nature reported was not an aberration, but part of a pattern of consistent inaccuracy.

"So we started digging deeper and deeper and it got more and more puzzling," Lazer said. "It was clear that it went off the rails years ago. Nobody at Google noticed. It was just like a boat where all the crew had died or something like that. It was just sort of floating around."

Lazer and Kennedy, whose two papers were recently published in Science and on the Social Science Research Network, also took issue with the popular theory -- originally reported in Nature's piece -- that a media-fueled flu hysteria threw Google Flu Trends off.

"What the storyline is, is that there was a broad amount of news coverage in that particular flu year and you had a bunch of people that were searching because of the media-stoked panic," said Kennedy. "But we also see them missing when there wasn't a media-stoked panic. There's also the issue that they did pretty darn well in the swine flu and bird flu epidemic, which to my memory sparked a much, much larger media panic."

"Without a much more rigorous analysis, I wouldn't claim this as the definitive answer," Lazer added. "On the other hand, frankly, I think our superficial analysis beats their superficial analysis."

Lazer and Kennedy's analysis is that changes in Google's search algorithm, which the company revises constantly and without disclosure, rendered the original model behind Flu Trends less and less accurate. More importantly, there was no system in place for the model to recalibrate itself. The researchers demonstrated that just by combining the CDC and Google datasets, they were able to predict flu trends more accurately than the CDC.

"It's possible to have a fairly accurate thing like Flu Trends, but you have to build in the assumption that there will be model drift," Lazer said. "If you build your model so it's recalibrated regularly, you'll do fine. You won't do perfect, but you're doing well."
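To make the idea of regular recalibration concrete, here is a minimal Python sketch. It is not the researchers' method or Google's model: the data is synthetic, and the function names, the 26-week window, the two-week reporting lag, and the linearly drifting "gain" between search volume and true flu rates are all assumptions chosen for illustration. It compares a model fitted once on the first year of data with one that is refitted every week on the most recent official figures available.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        return mean_y, 0.0
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def make_series(weeks=200):
    """Synthetic data (an assumption, not real flu figures)."""
    # "True" flu rate: a seasonal curve with a 52-week period.
    truth = [2.0 + 1.5 * math.sin(2 * math.pi * t / 52) for t in range(weeks)]
    # Search signal: the true rate times a gain that drifts upward,
    # standing in for gradual changes in search behaviour or algorithms.
    signal = [(1.0 + 0.01 * t) * y for t, y in enumerate(truth)]
    return signal, truth


def static_forecast(signal, truth, train_weeks=52):
    """Fit once on the first year, then never update the model."""
    a, b = fit_line(signal[:train_weeks], truth[:train_weeks])
    return [a + b * x for x in signal[train_weeks:]]


def recalibrated_forecast(signal, truth, train_weeks=52, window=26, lag=2):
    """Refit every week on the latest `window` weeks of official data.

    Official figures are assumed to arrive `lag` weeks late, so the fit
    for week t only uses weeks up to and including t - lag.
    """
    preds = []
    for t in range(train_weeks, len(signal)):
        end = t - lag + 1
        start = end - window
        a, b = fit_line(signal[start:end], truth[start:end])
        preds.append(a + b * signal[t])
    return preds


def mean_abs_error(preds, actual):
    return sum(abs(p - y) for p, y in zip(preds, actual)) / len(preds)


if __name__ == "__main__":
    signal, truth = make_series()
    actual = truth[52:]
    print("static MAE:      ", mean_abs_error(static_forecast(signal, truth), actual))
    print("recalibrated MAE:", mean_abs_error(recalibrated_forecast(signal, truth), actual))
```

In this toy setup the static model keeps applying a relationship that stopped holding after its training year, while the recalibrated model lags the drift by only a few weeks. Real search data would be far noisier, and the choice of window length is itself a judgment call.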

The other problem with Flu Trends, Lazer and Kennedy contend, is that the company's proprietary search algorithms prevent it from truly participating in scientific dialogue. Google actually published three papers about Flu Trends over the years, but Kennedy said the company did not respond to requests for clarification of its methodology, as an academic researcher would, something he finds "deeply troubling" for a company publishing scientific papers.

As well as adding recalibration, the researchers hope another lesson will be for companies to find ways to be more transparent about data that's salient to scientific claims. For instance, Lazer thinks there might not be too much harm for Google in releasing information about its algorithm with a two-year lag, given how quickly that algorithm changes. He hopes to start a dialogue with companies about creative solutions for data sharing that would support both scientific interests and the companies' business interests.

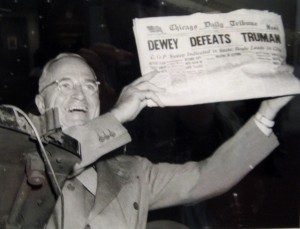

Lazer describes the fall of Flu Trends as a "Dewey versus Truman moment" for big data, referring to the 1948 American presidential election, in which polls famously predicted the wrong winner.

"Survey research did just fine after that, but it did require some soul searching about why this happened," said Lazer. "It required some thought. Just because you're doing something with big data doesn't mean you're doing it well. Generally with anything you're doing, you could do well or you can do badly. This case proves you can do big data badly. It doesn't prove at all that big data doesn't offer a value."